Let AI Write Flawless SQL: A Deep Dive into the SQL-of-Thought Multi-Agent Framework

1 Introduction

This article shares insights from the paper “SQL-of-Thought: Multi-agentic Text-to-SQL with Guided Error Correction,” which was uploaded to arXiv in September 2025 and accepted by the Deep Learning for Code Workshop at NeurIPS 2025!

The authors have also open-sourced the code on GitHub. Interested readers are encouraged to test it out!

2 The Problem SQL-of-Thought Aims to Solve

The problem this paper, SQL-of-Thought, aims to solve is very straightforward:

How to design an Agentic Workflow to improve the performance of LLMs in Text-to-SQL tasks.

3 The SQL-of-Thought Method

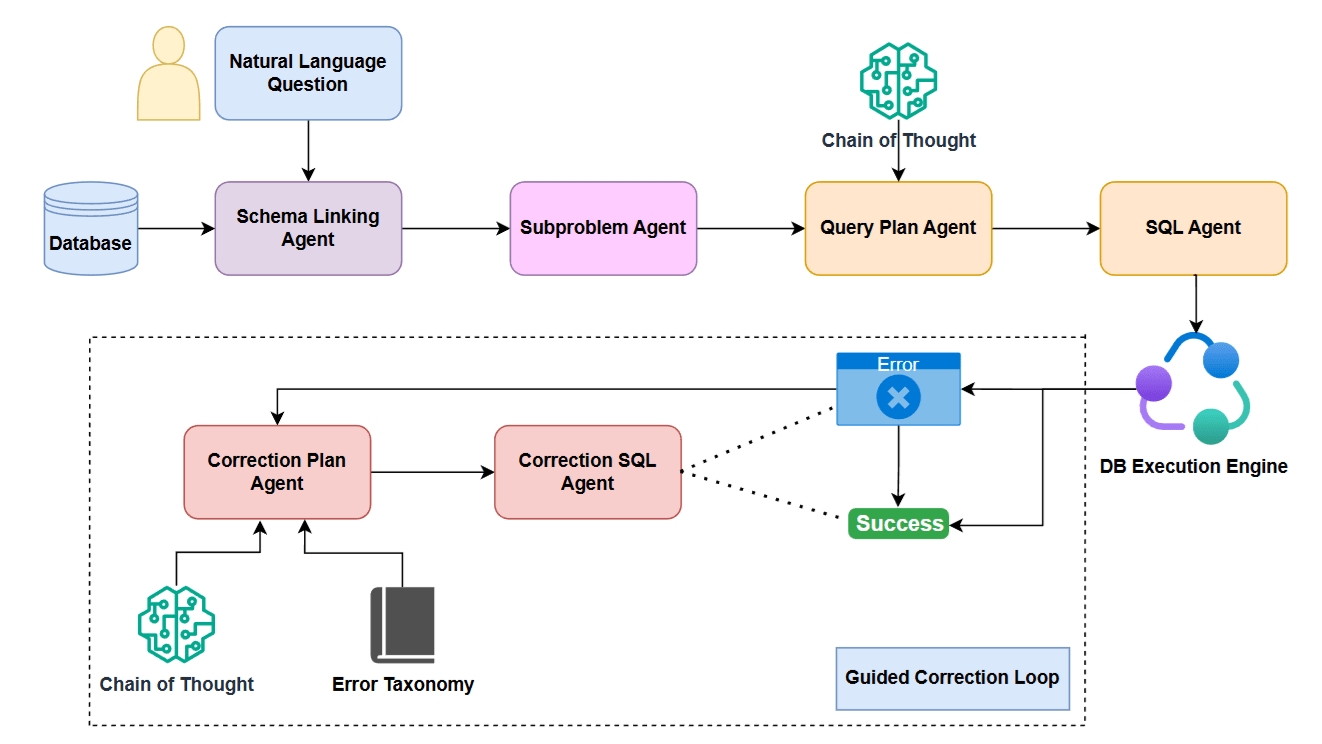

The image above illustrates the complete SQL-of-Thought workflow. Let’s briefly describe the tasks each agent in this workflow is responsible for:

Schema Linking Agent: Based on the user’s question and the database table schemas, this agent selects the most relevant (1) table schemas and (2) the necessary columns within each schema.

You are a Schema Linking Agent in an NL2SQL framework.Return the relevant schema links for generating SQL query for the question." Given: - A natural language question - Database schemas with columns, primary keys (PK), and foreign keys (FK) Cross-check your schema for: - Missing or incorrect FK-PK relationships and add them - Incomplete column selections (especially join keys) - Table alias mismatches - Linkage errors that would lead to incorrect joins or groupBy clauses Question: $question Table Schema: $table_schema Return the schema links in given format: Table: primary_key_col, foreign_key_col, col1, col2, ... all other columns in Table ONLY list relevant tables and columns and Foreign Keys in given format and no other extra characters.Subproblem Agent: Based on the output from the Schema Linking Agent, this agent breaks down the original question into multiple SQL subproblems. Each SQL subproblem is represented by an SQL clause (e.g., WHERE, GROUPBY, JOIN, DISTINCT, ORDER BY, HAVING, EXCEPT, LIMIT, UNION) and its corresponding value.

You are a Subproblem Agent in an NL2SQL framework. Your task is to decompose a natural language question into SQL subproblems. You will be provided: - A natural language question - A textual schema summary that lists relevant tables and columns (generated by a Schema Agent) Use this information to infer which SQL clauses are likely needed (e.g., WHERE, GROUPBY, JOIN, DISTINCT, ORDER BY, HAVING, EXCEPT, LIMIT, UNION). Question: $question Schema: $schema Output a JSON object containing a list of subproblems: { "subproblems": [ { "clause": "SELECT", "expression": "..." }, { "clause": "JOIN", "expression": "..." }, ... ] } Only output valid JSON — no markdown, no extra commentary.Query Plan Agent: Using the outputs from the Schema Linking Agent and the Subproblem Agent, the Query Plan Agent’s task is to generate a step-by-step SQL plan based on the question. Each item in the SQL plan is an incomplete SQL statement.

You are a Query Plan Agent in an NL2SQL Framework. Using the question, schema info, and subproblems, generate a step-by-step SQL query plan. Use Chain of Thought to think through the process. Question: $question Schema Info: $schema_info Subproblems: $subproblem_json $critic_feedback $subprob_plan Return plan steps with specific table, column names like: 1. FROM tableA 2. JOIN tableB ON tableA.colX = tableB.colY 3. JOIN tableC ON tableB.colZ = tableC.colW Return only the plan (no SQL or extra text).SQL Agent: This agent generates the complete and correct SQL code based on the outputs from the Schema Linking Agent, Subproblem Agent, and Query Plan Agent. The generated SQL code is then executed by the database engine to get the result.

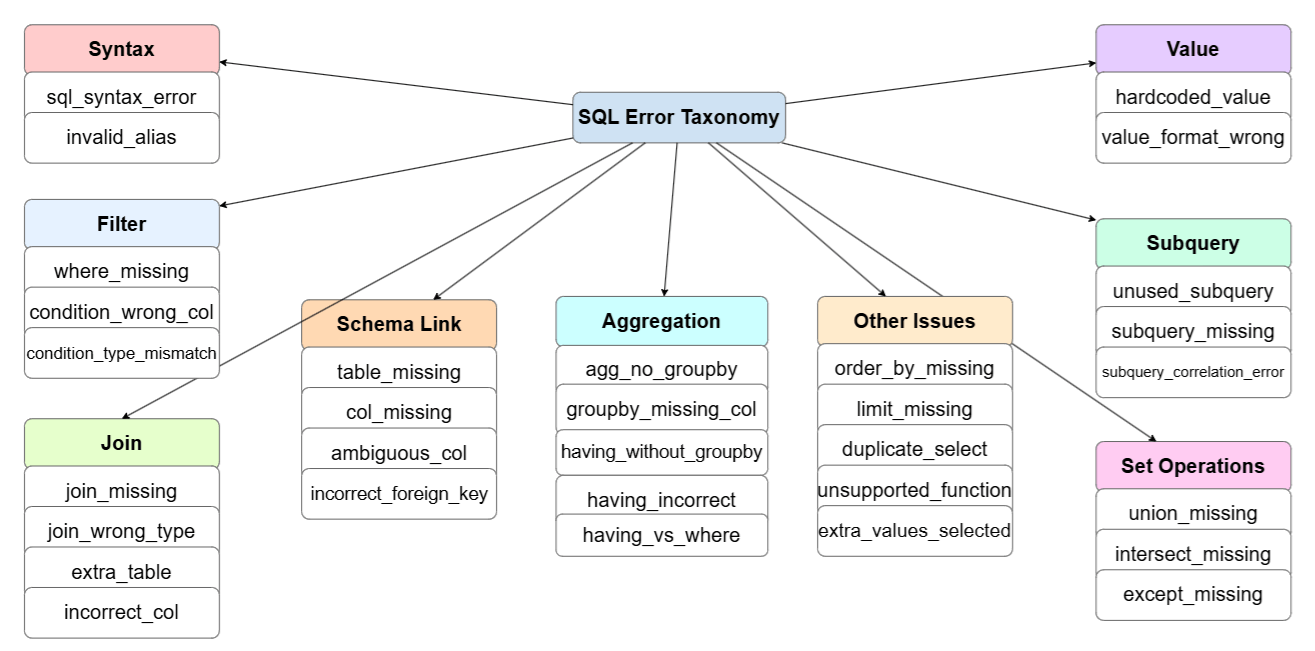

You are a world-class SQL writer AI in an NL2SQL multiagent framework. Your task is to write a single, syntactically correct SQL query that perfectly implements the provided query plan. Pay close attention to the table and column names in the schema. $question Plan: $plan $schema $subprob_sql $critic_feedback Write ONLY the final valid SQL query. Do NOT include commentary or unnecessary characters in the query.Correction Plan Agent: If an error occurs during the execution of the SQL code, this agent is triggered to generate a plan on how to correct the SQL. It’s worth noting that the paper emphasizes that the prompt for this agent not only asks the LLM to use a Chain of Thought approach to generate the correction plan but also provides an SQL Error Taxonomy to further enhance the agent’s performance. The SQL Error Taxonomy is a classification of potential errors in SQL code, as shown in the figure below:

SQL Error Taxonomy You are a Senior SQL Debugger in an NL2SQL multiagent framework. Your sole task is to analyze a failed SQL query to create a clear, step-by-step correction plan using Chain of Thought. Do NOT write the corrected SQL yourself. You are an expert in a comprehensive error taxonomy, including categories like: - `schema.mismatch`: The query references tables, columns, or functions that do not exist in the schema, or uses them ambiguously. - `join.logic_error`: Tables are connected incorrectly. This includes missing JOIN conditions, wrong foreign keys, using the wrong columns to join, or including unnecessary tables. - `filter.condition_error`: The WHERE or HAVING clauses are incorrect. This can mean filtering on the wrong column, using the wrong operator or value, or confusing the use of HAVING with WHERE. - `aggregation.grouping_error`: Errors related to aggregate functions like COUNT or SUM. This typically involves a missing or incomplete GROUP BY clause, or incorrect use of HAVING. - `select.output_error`: The final columns being selected are wrong. The query might be returning extra columns, missing required columns, or presenting them in the wrong order. - `syntax.structural_error`: The query has fundamental syntax errors or is missing critical clauses required by the question, such as ORDER BY, LIMIT, or set operators like UNION and INTERSECT. - `intent.semantic_error`: The query is syntactically valid but fails to capture the user's true intent. This includes using incorrect hardcoded values or failing to implement a required subquery or leaving out a logical solution. **Your Reasoning Process:*: 1. **Pinpoint the Mismatch:** Read the question and compare it to the `Failed SQL Query` and the `Pruned Schema` to find the exact source of the error. 2. **Find error type:** Read error taxonomy categories given above and try to identify the error in this query. Analyze the joins, aggregation, distinction, limits and except clauses applied carefully. 3. **Formulate a Hypothesis:** State the root cause of the error in a single sentence. Look out for simple errors in column names like 'name' instead of 'song_name' etc. 4. **Create the Plan:** Write a concise, step-by-step natural language plan that a junior SQL developer can follow to fix the query. **Input for Analysis:** **1. Original Question:** "$question" **2. Relevant Schema:** $schema **3. Failed SQL Query:** $wrong_sql $database_error There IS an error in the query. DO NOT return "no error, query seems fine". Provide a clear, step-by-step explanation of why the query is wrong and exactly how to fix it. Return ONLY the query error and correction plan, don't generate SQL.Correction SQL Agent: Based on the correction plan proposed by the Correction Plan Agent, this agent generates new SQL code. Like the SQL Agent, the SQL code produced by this agent is executed by the database engine. If it fails again, the process returns to the Correction Plan Agent, forming a loop.

You are an expert SQL debugger AI in NL2SQL multiagent framework. Your previous attempt to write a query failed. Your new task is to analyze the feedback and your incorrect query, then generate a new, corrected query after reading the question and analyzing the relevant schema. Question: $question Incorrect SQL: $wrong_sql Correction query plan- You MUST follow these steps to fix the query: $correction_plan $schema Write ONLY the final valid SQL query. Do NOT include commentary or unnecessary characters in the query.

4 SQL-of-Thought Experimental Results

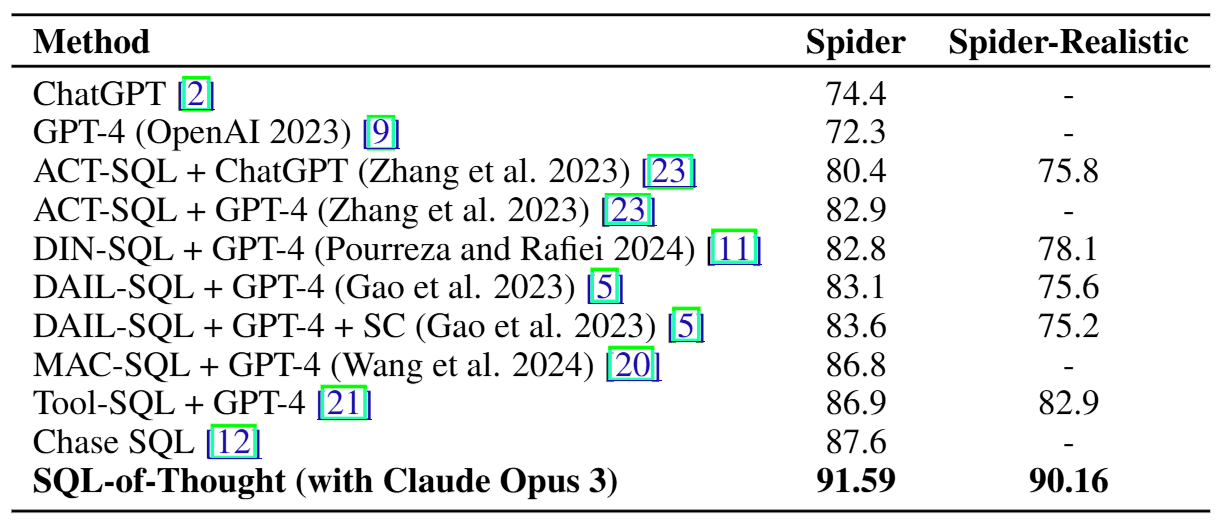

This paper primarily evaluates the performance of SQL-of-Thought using the following two benchmarks:

- Spider: Contains 20 database settings and 1,034 Text-SQL pairs.

- Spider Realistic: Based on the Spider dev set, this benchmark removes explicitly mentioned column names, containing 508 samples.

The experimental results for SQL-of-Thought are shown in the table below:

5 Conclusion

This article introduced the paper “SQL-of-Thought: Multi-agentic Text-to-SQL with Guided Error Correction,” explaining how its multi-agent workflow design enhances performance on Text-to-SQL tasks. Furthermore, we learned from this paper that providing an SQL Error Taxonomy can further improve performance when having an LLM correct SQL code based on execution feedback.