讓 AI 學會自我除錯!深入了解 SQL-of-Thought 如何打造更精準的 Text-to-SQL

1 前言

本篇文章和大家分享 SQL-of-Thought: Multi-agentic Text-to-SQL with Guided Error Correction 論文,該論文於 2025 年 9 月上傳至 arXiv 並被 NeurIPS 2025 的 Deep Learning for Code Workshop 給接受!

論文作者也有開源程式碼於 GitHub,有興趣的讀者可以再自行測試看看!

2 SQL-of-Thought 想解決的問題

本篇論文 SQL-of-Thought 想解決的問題非常直觀:

如何設計一個 Agentic Workflow 來提升 LLM 在 Text-to-SQL 的表現

3 SQL-of-Thought 方法介紹

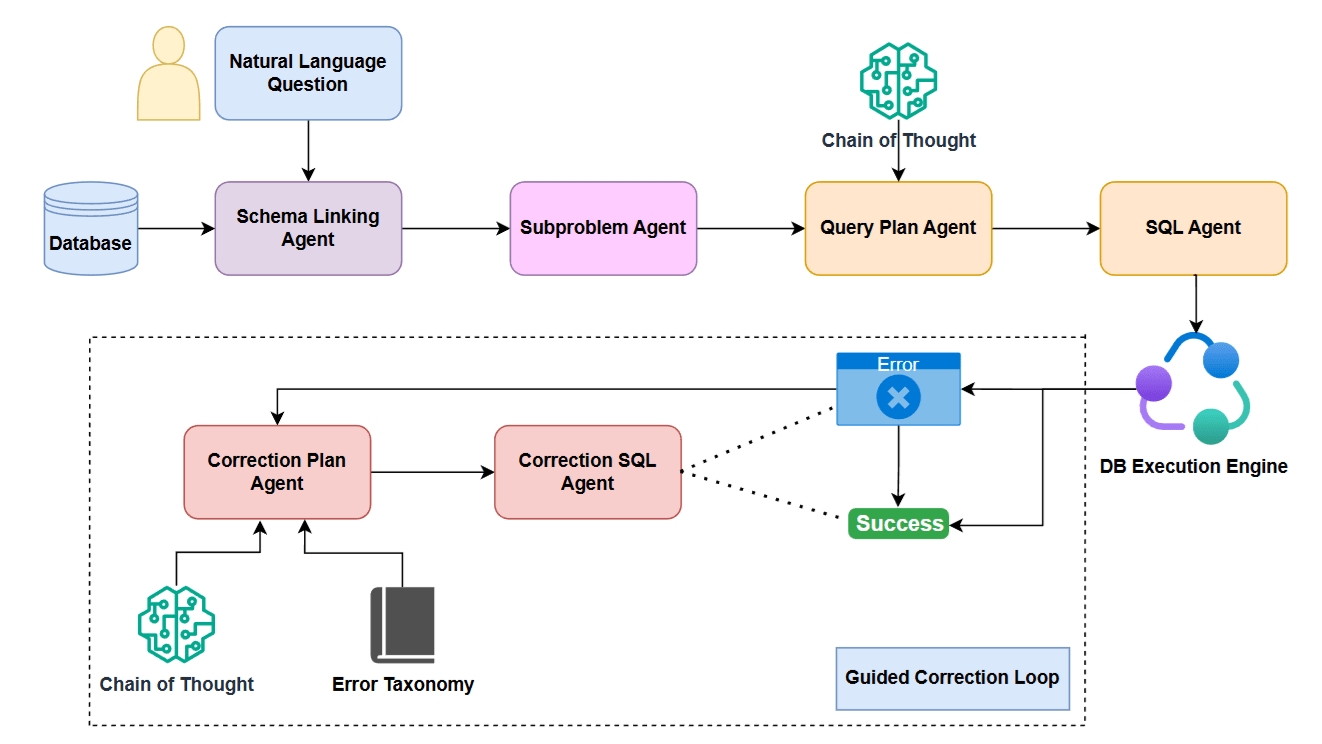

上圖呈現的是完整的 SQL-of-Thought 的 Workflow,接著我們來簡單描述 Workflow 中每一個 Agent 所負責的任務:

Schema Linking Agent: 基於 Question 以及 Database 中 Table Schema,來挑選出與目前 Question 最相符的 (1) Table Schema 與 (2) 每個 Schema 中需要使用到的 Column

You are a Schema Linking Agent in an NL2SQL framework.Return the relevant schema links for generating SQL query for the question." Given: - A natural language question - Database schemas with columns, primary keys (PK), and foreign keys (FK) Cross-check your schema for: - Missing or incorrect FK-PK relationships and add them - Incomplete column selections (especially join keys) - Table alias mismatches - Linkage errors that would lead to incorrect joins or groupBy clauses Question: $question Table Schema: $table_schema Return the schema links in given format: Table: primary_key_col, foreign_key_col, col1, col2, ... all other columns in Table ONLY list relevant tables and columns and Foreign Keys in given format and no other extra characters.Subproblem Agent: 基於 Schema Linking Agent 的輸出,來將原本的 Question 拆解為多個 SQL Subproblem,每個 SQL Subproblem 由一種 SQL Clause (e.g., WHERE, GROUPBY, JOIN, DISTINCT, ORDER BY, HAVING, EXCEPT, LIMIT, UNION) 以及其對應的值來表示

You are a Subproblem Agent in an NL2SQL framework. Your task is to decompose a natural language question into SQL subproblems. You will be provided: - A natural language question - A textual schema summary that lists relevant tables and columns (generated by a Schema Agent) Use this information to infer which SQL clauses are likely needed (e.g., WHERE, GROUPBY, JOIN, DISTINCT, ORDER BY, HAVING, EXCEPT, LIMIT, UNION). Question: $question Schema: $schema Output a JSON object containing a list of subproblems: { "subproblems": [ { "clause": "SELECT", "expression": "..." }, { "clause": "JOIN", "expression": "..." }, ... ] } Only output valid JSON — no markdown, no extra commentary.Query Plan Agent: 基於 Schema Linking Agent 與 Subproblem Agent 的輸出,Query Plan Agent 的任務是基於 Question 來生成 Step-by-Step 的 SQL Plan。 SQL Plan 中的每個項目都是一段不完整的 SQL 語句

You are a Query Plan Agent in an NL2SQL Framework. Using the question, schema info, and subproblems, generate a step-by-step SQL query plan. Use Chain of Thought to think through the process. Question: $question Schema Info: $schema_info Subproblems: $subproblem_json $critic_feedback $subprob_plan Return plan steps with specific table, column names like: 1. FROM tableA 2. JOIN tableB ON tableA.colX = tableB.colY 3. JOIN tableC ON tableB.colZ = tableC.colW Return only the plan (no SQL or extra text).SQL Agent: 基於 Schema Linking Agent, Subproblem Agent 與 Query Plan Agent 的輸出來產生完整正確的 SQL Code。SQL Code 會被 Database Engine 執行後,得到執行結果

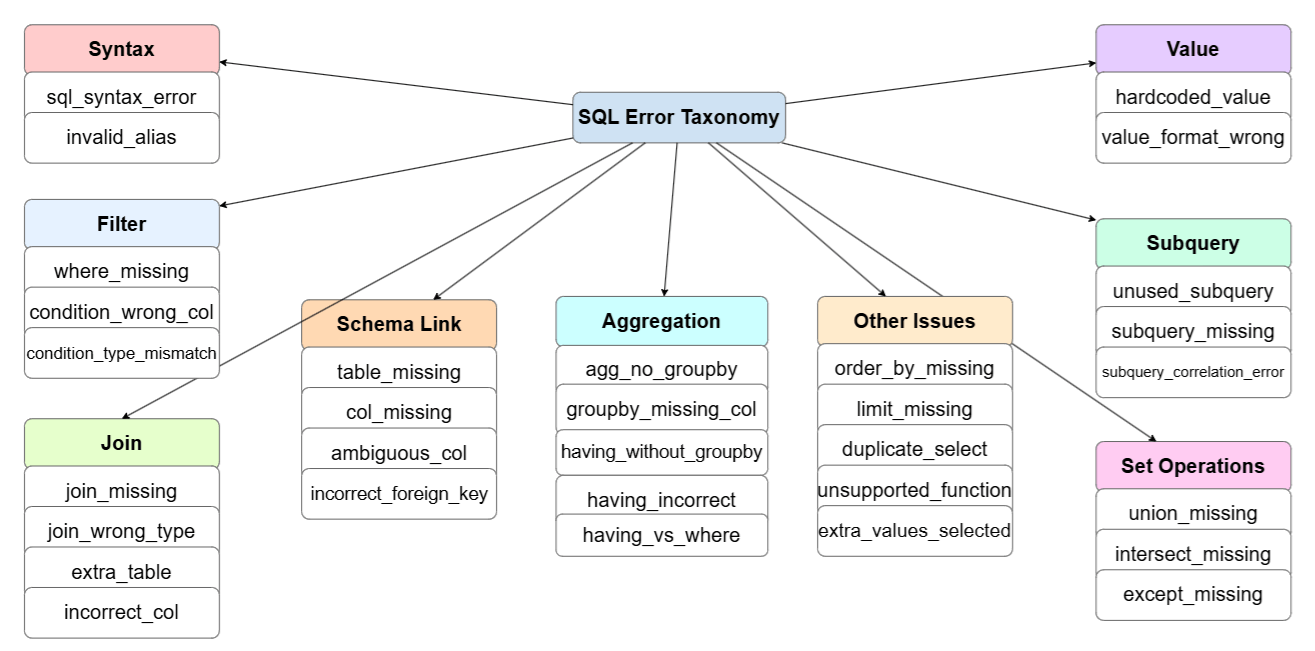

You are a world-class SQL writer AI in an NL2SQL multiagent framework. Your task is to write a single, syntactically correct SQL query that perfectly implements the provided query plan. Pay close attention to the table and column names in the schema. $question Plan: $plan $schema $subprob_sql $critic_feedback Write ONLY the final valid SQL query. Do NOT include commentary or unnecessary characters in the query.Correction Plan Agent: 如果 SQL Code 的執行過程中有 Error 產生,則透過此 Agent 來產生如何修正 SQL 的計畫。值得注意的是,本篇論文特別強調,在這個 Agent 的 Prompt 中不只要要求 LLM 透過 Chain of Thought 的方式來產生修正計畫,還提供 SQL Error Taxonomy,進一步提升此 Agent 的表現。SQL Error Taxonomy 其實就是 SQL Code 中可能出現的 Error 種類,如下圖所示:

SQL Error Taxonomy You are a Senior SQL Debugger in an NL2SQL multiagent framework. Your sole task is to analyze a failed SQL query to create a clear, step-by-step correction plan using Chain of Thought. Do NOT write the corrected SQL yourself. You are an expert in a comprehensive error taxonomy, including categories like: - `schema.mismatch`: The query references tables, columns, or functions that do not exist in the schema, or uses them ambiguously. - `join.logic_error`: Tables are connected incorrectly. This includes missing JOIN conditions, wrong foreign keys, using the wrong columns to join, or including unnecessary tables. - `filter.condition_error`: The WHERE or HAVING clauses are incorrect. This can mean filtering on the wrong column, using the wrong operator or value, or confusing the use of HAVING with WHERE. - `aggregation.grouping_error`: Errors related to aggregate functions like COUNT or SUM. This typically involves a missing or incomplete GROUP BY clause, or incorrect use of HAVING. - `select.output_error`: The final columns being selected are wrong. The query might be returning extra columns, missing required columns, or presenting them in the wrong order. - `syntax.structural_error`: The query has fundamental syntax errors or is missing critical clauses required by the question, such as ORDER BY, LIMIT, or set operators like UNION and INTERSECT. - `intent.semantic_error`: The query is syntactically valid but fails to capture the user's true intent. This includes using incorrect hardcoded values or failing to implement a required subquery or leaving out a logical solution. **Your Reasoning Process:*: 1. **Pinpoint the Mismatch:** Read the question and compare it to the `Failed SQL Query` and the `Pruned Schema` to find the exact source of the error. 2. **Find error type:** Read error taxonomy categories given above and try to identify the error in this query. Analyze the joins, aggregation, distinction, limits and except clauses applied carefully. 3. **Formulate a Hypothesis:** State the root cause of the error in a single sentence. Look out for simple errors in column names like 'name' instead of 'song_name' etc. 4. **Create the Plan:** Write a concise, step-by-step natural language plan that a junior SQL developer can follow to fix the query. **Input for Analysis:** **1. Original Question:** "$question" **2. Relevant Schema:** $schema **3. Failed SQL Query:** $wrong_sql $database_error There IS an error in the query. DO NOT return "no error, query seems fine". Provide a clear, step-by-step explanation of why the query is wrong and exactly how to fix it. Return ONLY the query error and correction plan, don't generate SQL.Correction SQL Agent: 基於 Correction Plan Agent 所提出的修正計畫,實際生成新的 SQL Code。與 SQL Agent 一樣,此 Agent 所產生的 SQL Code 會被 Database Engine 執行,若有錯誤則會回到 Correction Plan Agent 形成一個迴圈

You are an expert SQL debugger AI in NL2SQL multiagent framework. Your previous attempt to write a query failed. Your new task is to analyze the feedback and your incorrect query, then generate a new, corrected query after reading the question and analyzing the relevant schema. Question: $question Incorrect SQL: $wrong_sql Correction query plan- You MUST follow these steps to fix the query: $correction_plan $schema Write ONLY the final valid SQL query. Do NOT include commentary or unnecessary characters in the query.

4 SQL-of-Thought 實驗結果

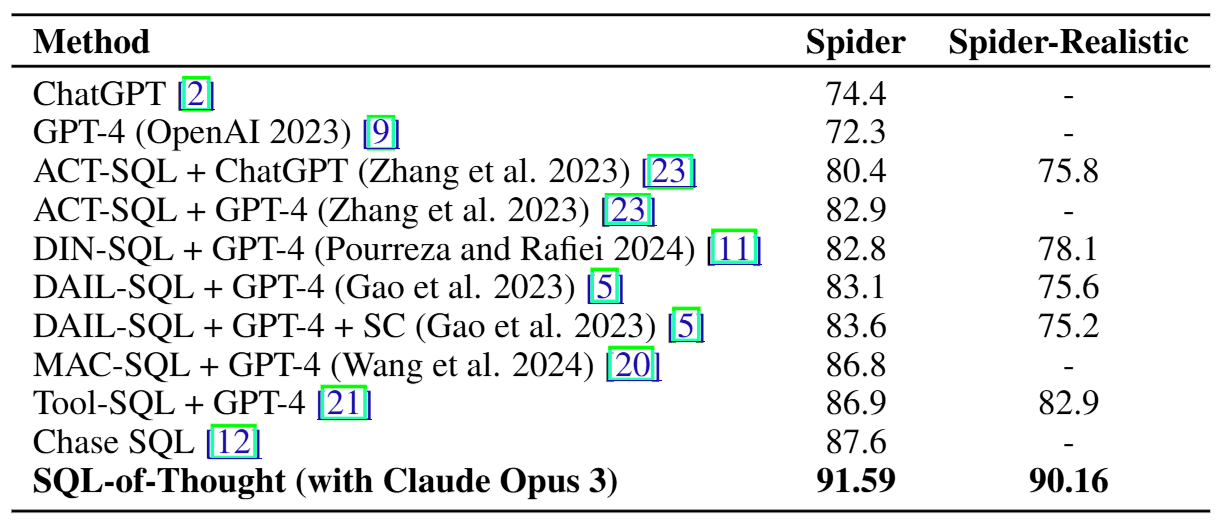

本篇論文主要透過以下兩個 Benchmark 來衡量 SQL-of-Thought 的表現:

- Spider: 包含 20 種 Database 設定以及 1034 個 Text-SQL Pairs

- Spider Realistic: 主要基於 Spider Det Set 將實際提到的 Colume Name 去除,包含 508 個樣本

SQL-of-Thought 實驗結果如下表所示:

5 結語

本篇文章介紹了 SQL-of-Thought: Multi-agentic Text-to-SQL with Guided Error Correction 論文,理解其 Multi-Agent Workflow 的設計如何提升 Text-to-SQL 的表現。此外,從本篇論文中,我們也了解到在讓 LLM 基於 SQL Execution Feedback 修正 SQL Code 時,若提供 SQL Error Taxonomy 可以更進一步提升表現!